Minutes

Date: 10th April, 2019

Attendees:

- Aidan Heerdegen (AH) CLEX, Andrew Kiss (AK) COSIMA, ANU

- Marshall Ward (MW) GFDL

- Russ Fiedler (RF), Matt Chamberlain (MC) CSIRO Hobart

- Nic Hannah (NH) Double Precision

COSIMA

Consortium for Ocean-Sea Ice Modelling in Australia

Date: 12th March, 2019

Attendees:

MW: Submitted parallel IO FMS patch. New automake made PR more complicated. FMS now buildable by automake. If we add new files/dependencies the build will fail. Not very auto. Works well. Some tuning with Lustre. Just need a good io_layout with large contiguous chunks.

AH: Compression? MW: IO runtimes double with compression. Testing some of the newer algos and getting better numbers. AH: How does he get other compression into netCDF? MW: Custom libraries. Will make accessible when time comes. Have been using it. Good. MW: Haven’t heard back from GFDL yet. AH: will be needed for further high res models.

Date: 14th February, 2019

Attendees:

New:

Existing:

Date: 15th January, 2019

Attendees:

PD: Stopping an 18 year run with harmonised code. Seems successful. Not losing summer Antarctic sea ice, which had been an issue. Dave Bi gave his approval.

PD: Looking at new bug fixes on GitHub. Didn’t appear to be in the CSIRO code.

RF: The fixes I added weren’t to do with harmonisation. Diagnostic for transport on density levels. PD: Won’t affect our model run? RF: Yes. PD: Maybe should keep the 18 year run going. RF: There is also a fix to a submesoscale smoothing that you’re probably not using. 99% likely you’re not using that. PD: Could check by looking at the namelist? RF: It’s smooth hblt, or something, but there is also a note in the code specifying the namelist option that shouldn’t be used because of this error. PD: Could you send me the namelist value? RF: Should be in GitHub issue/pull-request. AH: I’ll put links from your commits on to the slack channel.

https://github.com/mom-ocean/MOM5/commit/11f06f989645b1b21aa990ade61440976451bbb6

https://github.com/mom-ocean/MOM5/commit/06a6d0afb55a1f188d0b58b89513d646d47f062f

AH: hblt_smooth

RF: If you applied the smoothing could smooth into rock. PD: Keep the current run going? Want to get an ENSO spectrum. RF: Shouldn’t make a difference, but will get noise due to changes in red sea fix. Statistics will be the same.

AH: In the release candidate code we reproduced a red sea fix timing bug to make the comparison as clean as possible, using a namelist option. That has been stripped out before merging into the master branch. If you continue with this test run and it becomes your spin up run then you will not be able to do a clean comparison if you then change something else from the master branch version. Is this a test run or will it become a spin up?

PD: Sometimes test runs become real runs, but I’ll say this is a test.

AH: If at any point you start a spin up you need to be on a commit on the master branch. Currently running from a commit on a pull request that no longer exists. There is no comparable commit on the MOM master branch repo because of removing the salinity time unfix option, and merging in RF’s bug fixes.

NH: Second that. Also important if we want to continue to be harmonised, this is a divergence and if we carry on with that we’re diverging immediately.

PD: Alright. Will leave this run going to test ENSO spectrum, but will start a new parallel run for safety. Don’t want to be in the position where the test run gets turned into a spin-up because of lack of time.

AH: Calendar time not compute time is your constraint? PD: Yes. AH: Definitely agree with that strategy. PD: Never have enough compute time, but always have that trade off.

AH: Will tag the code with a CM2 version which you can use to identify the code. PD: Should tag straight away and I will clone and let people know this is the correct code.

NH: Reproducibility is important, and the current MOM code does not reproduce. PD: On restarts. NH: Yes, so would be a shame to lose that by not using the merged code.

AH: Not only is it reproducible, but NH is running tests for this. NH: That’s right. Just a simple 2 day run versus 2×1 day runs. To do that test presently turns off red sea fix. Now I can turn it back on? RF: It should now reproduce. AH: Turn it back on and check it reproduces? AH: This is reproducible between runs, not necessarily reproducible to before RF’s fix. PD: Which is reproducible on restarts and which isn’t? AH: Current harmonised code in the MOM5 master is reproducible between restarts, the code from the pull request that PD is currently running is potentially reproducible if you turn off the flag we introduced which emulated the incorrect behaviour of the old MOM5 CM2 code for testing purposes. PD: So the harmonised code in the pull request is different to the master branch? AH: Yes, in that the hack to emulate the incorrect timing behaviour of the red sea fix has been removed, and a couple of RF’s bug fixes added. PD: Going forward will there be a harmonised branch and a main branch? AH: No, there will be a tag identifying where you get your code from. If you need to pull in updates but don’t want all the updates in master, then you might start a new branch, and cherry pick those updates. At that point you might have a different branch, but not currently. In general better off not having a separate branch, as it just starts diverging again. I don’t know how you guys work and I’m guessing you don’t want code changes, but if, for example, you just wanted to add code changes that added diagnostics, you could add those in, and have something to compare against. PD: Just trying to figure out how it will work, if there are different branches, and if down the track we want to develop the harmonised code again. AH: Yes, and if you have some testing then you can add code and test to see if there are differences in the output, and so add code changes with confidence.
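The restart test NH describes (one 2 day run compared with two consecutive 1 day runs) can be sketched with a toy model. This is only an illustration of the test structure, not MOM code: the `step` update, the step counts and the periodic "fix" are all hypothetical stand-ins. The `use_global_clock` switch mimics a timing bug, where a periodic correction is scheduled from the start of each run segment instead of from the model clock.

```python
import hashlib
import struct

STEPS_PER_DAY = 4   # hypothetical number of model steps in a day
FIX_INTERVAL = 3    # hypothetical interval (in steps) of a periodic correction


def step(state, apply_fix):
    """Advance the toy state one step; optionally apply the periodic fix."""
    new = [0.5 * x + 0.25 for x in state]
    if apply_fix:
        new[0] += 0.125
    return new


def run(state, start_step, nsteps, use_global_clock):
    """Run nsteps steps. With use_global_clock=False the fix is timed from
    the start of this run segment, which emulates a restart timing bug."""
    for i in range(nsteps):
        clock = start_step + i if use_global_clock else i
        state = step(state, clock % FIX_INTERVAL == 0)
    return state


def checksum(state):
    """Bitwise checksum of the state, so any difference is detected."""
    return hashlib.sha256(struct.pack(f"{len(state)}d", *state)).hexdigest()


def reproduces_across_restart(use_global_clock):
    """Compare one 2 day run against two 1 day runs joined by a restart."""
    initial = [1.0, 2.0, 3.0]
    single = run(initial, 0, 2 * STEPS_PER_DAY, use_global_clock)
    first = run(initial, 0, STEPS_PER_DAY, use_global_clock)
    second = run(first, STEPS_PER_DAY, STEPS_PER_DAY, use_global_clock)
    return checksum(single) == checksum(second)


print(reproduces_across_restart(True))
print(reproduces_across_restart(False))
```

Comparing checksums rather than values within a tolerance is deliberate: the test is about bit reproducibility across a restart, so any difference at all counts as a failure.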

MW: Highlights that we have not been tagging MOM for some time. Maybe we need more regular tagging.

PD: In an ESM meeting on Friday Tilo said it was too late to include changed code for CMIP6.

AH: Whatever we did he wouldn’t put it in CMIP6? PD: Yes. AH: Invested too much time on spin ups? PD: Yes. AH: So no particular rush to do this. PD: I thought it was good to pass that on. AH: Good to know, thanks. MW: There’s ESM for CMIP6, but also in the CoE. Will they use what you’re working on? AH: Yes, but shame, as we’re not then using the same code as the CMIP6 submission. MW: I thought that was your interest in ESM. AH: Yes, not sure.

MW: Not sure how much we can do without Steve being present, or anyone from GFDL.

AH: Made some notes, hoped to get some feedback and others make changes, additions. MW did add some useful points about defining domain experts.

AH: The MOM5 repository and community is not welcoming to those outside the current clique of COSIMA. Pull requests languish for years without attention because it is no-one’s responsibility, no-one has a defined role. Even if some people put their hands up to monitor pull requests.

Have a contributing.md, to tell people how to contribute to the code. There are users outside this group who use it, see them on the MOM users mailing list, and can use that channel to advertise it.

MW: That was my experience as a grad student. Not sure who is in charge of the code. Tried to contribute code and found it intimidating. Not a fan of overly prescriptive instructions on how pull requests must look, or how code must be written. That has made me less likely to report bugs. Would rather get bad contributions than no contributions. AH: Poor quality contributions require effort from us to work through them. MW: Yes, just want to make sure they know it is ok to screw-up. A lot of projects require a lot of environment information etc, which can be onerous for new users. Now tend to ignore those instructions and wait for devs to ask for it. Do think that governance model is good, but want to encourage contributions and emphasise it is the effort that matters rather than the quality of the contribution.

NH: I think I agree. Want to be more friendly to outsiders, also agree with MW, want to put as few roadblocks as possible. People have little incentive to contribute to the repository. The harder we make it, the less likely they are to contribute. With Pull Requests, in order to merge we need testing, but people aren’t going to do those tests. They test for themselves to satisfy their scientific goals and that’s it. Not sure how to reconcile that.

MW: Automated code coverage tests help. A lot of CI services panic too much when code coverage drops after a contribution. This can be useful if it then spurs contributors to improve unit tests to improve that metric. AH: Currently have 0% code coverage, so it can’t get worse!

MW: Yes, one issue is we have no code coverage presently. But looking more broadly if we have some automated tests that can tell us what is broken that can help a lot. I know we have testing. AH: Only compilation testing.

AH: If we do this, it will be a burden, want to minimise this, hence the document defined some steps for assessing a PR:

AH:

MW: James Munroe has experience contributing to dask, managed by experienced software engineers. He had to do a lot of testing, as well as style and engineering changes. They had someone asking him to make changes until it was deemed good enough. We could do this too, but it would require engagement from us. Also, are we overthinking this? We hardly get any pull requests.

AH: Yes, but some of this stuff is useful for us to do too. A lot of it is just good communication. It may sound onerous, but in many ways it makes it easier, so people aren’t trying to guess the right thing to do, e.g. with code style it may be as simple as pointing to a particularly well written section/module and say “seek to emulate this”

MW: GFDL is struggling with this right now. I sent them a beefy FMS patch and they did not know how to handle it. They didn’t want it, but they didn’t want to just reject it. Might be worth figuring out how they are dealing with it. AH: They might copy what we end up doing. MW: Yes, they might end up copying this.

AH: First up, should we consider roles, e.g.

Contributors: Anyone who wants to contribute code

Committer: Anyone with commit access

Maintainers: Committers who check PRs, assign PRs to other committers and maybe do some other admin tasks.

Admins: Do we need another layer? Currently only Steve, Nic and I are Organisation Owners and Admins on the MOM5 repo. Need at least 2 admins at all times (run over by bus scenario).

Sponsoring institutions: Acknowledge role of institutions which provide time for code development?

BDFL: Steve? (Does he even want to be a Benevolent Dictator?)

AH: Do we want to do something like this? NH: Yes. It is a great idea. Write it down and we can use it ourselves, and our collaborators.

AH: Are we happy with the roles? NH: Yes, need to look more closely. Looks good. Keep it simple.

MW: Maintainers should be admins, at least for now. I wonder if Steve would prefer to not have a formal role, as he is transitioning out of the coding. AH: Would he want to be BDFL? MW: Would probably accept it, but maybe he doesn’t want to.

AH: I think it is good to keep him there. At some point we will transition to MOM6 and it will no longer be my role to look after this. Others will move on too, so it would be good to have Steve still there in that eventuality.

MW: He could be a decider. AH: Yes, like having a Queen. Just have to host him in visits every now and then. MW: Head of State, but not the Head of Government.

AH: Ok, get rid of admin/maintainer distinction. Just make them Maintainers.

MW: Sponsoring institutions is interesting. AH: It was to acknowledge that institutions that pay people like me might have a reasonable expectation that the people they pay to help maintain the code could have some say in the way it is run. RF: I don’t like that, it is a community code. MW: I agree with RF, but there is some reality. AH: More about expectations. If I leave my job, the CoE might have some expectation that my replacement would be able to become a committer/admin. MW: I worry about sponsoring institutions insisting on having some oversight. AH: I always thought CSIRO was a bit keener on the whole “official stamp of approval” thing, but if we don’t like it I’ll get rid of it. No worries.

AH: Ok. Let’s codify the roles, and look into a contributing.md to sit at the top of the repo to tell people how to contribute code. This all started with wanting to have timely responses to Pull Requests. Who wants to be what?

MW: What is the

NH: Happy to be a maintainer, but COSIMA would have to pay for my time. AH:

MW: Don’t have an explicit list of users.

RF: How far back do you go with sponsoring institutions? Back to 2009?

MW: I would prefer not to involve them.

RF: Just leave it. Keep it simpler.

MW: When I say sponsor, I mean someone who can’t function without this code. Need a maintainer.

AH: It is literally my job, and happy to volunteer as a maintainer.

AH: Maintainers check PRs, and then assign them to people, we need others to be willing to have a PR assigned to them. MW: Happy to do it, but feel like others are more invested. AH: Doesn’t have to be just you. It is just having someone respond to a PR, check progress, feed back what is required to get it merged, close it if it doesn’t meet standards and the submitter doesn’t respond to queries to fix it.

MW: Yes we need that role, at the moment it is AH and NH. If you want to add me I’m happy to do it, just not sure where I will be in the near future.

AH: Ok how do people get these roles? Commit access is currently Steve, NH and AH. Do other people need it, or want it. How do we decide who should have it?

MW: Best to have one person responsible for commits/merges. AH: Steve recently merged a commit that needed to be reverted. MW: Which is what prompted this. AH: Yes, and he wouldn’t have felt the need to do it if he knew it was being handled.

AH: Do we need to do this now? Specify who is on “the committee”?

MW: Contributor is obvious. Pull committers from contributors. Pull maintainers from committers. If you don’t get enough commits, then a project just dies. AH: Don’t need to codify how someone becomes a committer? MW: No. We’re too small. Look on the issues, and PRs, represents very few people. AH: Ok, so no need right now.

MW: Quite premature to codify this.

AH: Need a `contributing.md`, how to contribute.

MW: Any role for governance is getting the word out. Find the user base. There are a lot of MOM users out there. Steve cited a thousand MOM users in 2010. Governance should be about where are these people, and then get the community. AH: You run the danger of “hey guys come and contribute” and not have processes in place. MW: A good problem to have. AH: What happens if you got 10 PRs tomorrow what would you do with them? MW: Deal with them one at a time. AH: But who would deal with it? We have existing PRs that aren’t being dealt with, one has been there for a year. MW: Yeah, but it is a bit stupid. Changed an integer to an 8-byte. RF: I would have rejected it because it used a specific kind. Change was a good idea, but badly implemented. MW: Ok, let’s look at this and learn some lessons and produce a document.

MW: So let’s look at this PR. What are we going to do about it? So who do we assign this to? I would assign to Russ as he has expressed a strong opinion. AH: Ok. MW: What would you say about this PR RF? RF: I would split into two, as they are unrelated. If you do submit these things, try and keep to individual issues. Specifically about this, first change is non-portable, which can easily be fixed. Would we do the fix, or would he do the fix? All it needs is a selected_int_kind. MW: This is a portability issue. RF: Yes, anything from now on that gets contributed should be standard. MW: Lesson should be an evolving document about what we want. RF: Yes, standards compliant, independent commits. MW: Would you be ok with being assigned this? AH: Already done it. RF: Second part could be a real bug in remapping vectors. Maybe get back to him and ask if he can show a test case. AH: Ideally this would be associated with an Issue which explains the problem. AK: Really ideally would include a test case that fails and that subsequently passes with the fix in the PR. MW: I would argue against pedantry. GitHub treats Issues and PRs similarly, so while an Issue with a test would be ideal, shouldn’t be mandatory. If you would rather formalise then fine.

RF: Have to leave

Subsequent discussion:

AK: Who feels they have responsibility, so things don’t languish, and who has authority to say what is going to happen. NH: And who has time. MW: Good not to dump maintainer responsibilities on NH.

AH: The suggestion is prescriptive so that people do not waste time wondering how to do things. Not mandatory, but good to give guidance.

MW: Current touchy point is assigning stuff to RF. Had a meeting and asked if it was ok, but won’t scale. Let’s clean up the pull requests.

MW: I have a different idea of the purpose of the Issues page to AH for payu. You see it as a dumping ground for ideas (AH: Yes), and I see it as something to keep as small as possible. Ok for a small group, but with a larger group it can balloon and lose track of good ideas. MW: Yes bad idea, or not enough time should be a criterion for closing an issue, without losing the idea. Would be great to get issues down to 1-2 pages, would reveal a lot of lessons.

AK: Paul’s PR is pretty substantial. A whole new file, ocean_basal_tracer. MW: But bungled the build script. Also made a timer that does nothing. This is a good issue with a terrible title. This is a good example.

MW: These projects work best when they’re community driven, that we agree to do it. AH: If you put up your hand to be assigned stuff, you will get assigned stuff. If they don’t, then people won’t. AK: If someone has that role you feel ok assigning to them.

MW: When we talk about governance, let’s not worry about users, let’s figure out how to assign issues, that is a great place to start. Not worry so much about how users should make PRs.

NH: I agree, but maybe still work on guidelines for contributors. There was a document called “How to contribute”, based on technical specifications. It was a markdown doc in the original website. AH: Maybe that exists somewhere. NH: It’ll be around somewhere, outlines how to do a PR and things like that. AH: Should definitely start with that.

MW: Make it a goal this week to deal with those two PRs. Assign me Paul’s if you want.

AH: Willing to be a maintainer? NH: Yes, I like being part of that community. Hard to know how to dedicate time to it sometimes. We need to do a certain amount of maintenance. Maybe think of it as pro-bono professional work. Matt England is keen on that sort of stuff, he might be willing to support it/sanction it/pay for it.

Agreed: Maintainers: Aidan, Nic, Marshall. Ask Steve if willing to have BDFL status.

AK: Modified topography to remove terraces (bug). Smoothed a seamount near Severny Island, and eliminated very small cells near tripoles. 54KSU / model year. This is repeat year forcing. Time step is 720s. Did a test run with 900s and it crashed. 720s is a factor of 1800 (coupling time step). No factors in between.
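The requirement that the model time step divide the coupling interval evenly can be checked with a short helper. This is a minimal sketch: the function name is hypothetical, and the coupling interval passed in the usage line is an assumed value for illustration, not one read from the model configuration.

```python
def candidate_timesteps(coupling_interval, lower, upper):
    """Return every integer time step (in seconds) between lower and upper,
    inclusive, that divides the coupling interval with no remainder."""
    return [dt for dt in range(lower, upper + 1)
            if coupling_interval % dt == 0]


# Assumed 3600 s coupling interval, searching between 700 s and 900 s.
print(candidate_timesteps(3600, 700, 900))
```

With an assumed interval of 3600 s this returns only 720 and 900, so there is no admissible step between the two that were tried. Passing a different interval shows which steps would be admissible in that case.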

AK: MOM was going unstable, generating 18 m/s velocities, CICE was the first thing to crash with ice remapping error. Was using ndtd (number of CICE time steps per MOM baroclinic timestep). When using ndtd=3, making CICE stable with vaguely unstable MOM. At one point in the run I added Rayleigh damping in Kara Strait as I had done in IAF run. MOM was going unstable in Kara Strait, moving ice around too quickly, and CICE crashed. Once I stabilised MOM, could get away with ndtd=2, so now the model is CICE bound rather than MOM bound. AH: What is runtime? AK: 2 hours, so can do 2 months/submit. MW: What were the fails at the beginning? Related? AK: Showing output from a run-summarising tool. Just shows when runs failed. Not necessarily crashes, might have just stopped to change something. AH: So when you say MOM went unstable, not so unstable it crashed, but unphysical velocities? AK: Haven’t looked in detail, but imagine it would be grid scale alternation and that sort of thing. AH: Does that mean, in order to be compatible with CICE, maybe we need to reduce some of MOM’s thresholds for warnings so they are better matched? AK: Yes. CICE has a CFL condition that is tighter than MOM. MW: They are solving different equations. AH: Not saying they should, or could be, exactly the same, but MOM is more permissive than CICE, but the crashes happen in CICE. So if you reduce the limits in MOM it will diagnose the problem in the correct place, rather than indirectly through CICE crashing. A new user would then get the right information.

AK: Haven’t examined the fields. It is right on the limit of the barotropic CFL limit. Splitting factor of 80. The outputs show it is right on the limit. MW: Griffies would hate that. AH: Pretty routine to change that when timestep changes. AK: Had to go 100 for dt=900s for it to pass that check. Might be wise to up that number.
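The two splitting factors quoted above imply the same barotropic time step, which a few lines of arithmetic make explicit. This is a sketch under a stated assumption: it treats the check as a simple cap on the barotropic step (baroclinic step divided by the splitting factor). The 9 s cap is inferred from the quoted numbers, not taken from the model's own CFL check, and the function names are hypothetical.

```python
import math


def barotropic_dt(dt, split):
    """Barotropic time step implied by a baroclinic step and splitting factor."""
    return dt / split


def min_split(dt, max_barotropic_dt):
    """Smallest integer splitting factor keeping the barotropic step
    at or below the assumed cap."""
    return math.ceil(dt / max_barotropic_dt)


print(barotropic_dt(720, 80))   # 9.0
print(barotropic_dt(900, 100))  # 9.0
print(min_split(900, 9.0))      # 100
```

Both configurations give a 9 s barotropic step, which is consistent with the run sitting right on the limit: raising the baroclinic step to 900 s needs the splitting factor raised in proportion.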

MW: This is tenth degree? Really dropped your CPU count. AK: This is a minimal config. Dropped from 6K to 2K, getting same throughput as larger model. MW: But with lower ndtd and higher timestep. AK: Yes. AH: timestep is like a superpower.

NH: Great! Well done. AK: Thanks for your work. On the number of CPUs, it is good to run minimal configs, as they are inherently more load balanced. Each CPU is doing more work and a greater variety of work. A good reason this minimal config is more efficient. MW: Won’t it run slower if you reduced timestep? Doesn’t make it more efficient necessarily? NH: I think it does.

NH: How does this compare to MOM-SIS? We can’t really compare without running with the same grid? MW: Can usually use the MOM timing and add 15%. I went back and checked these numbers. AH: I think I was getting six months / submit with MOM-SIS at a nominal 7200 core layout, with dt=600s. The larger ACCESS-OM2 config has 6K cores in total, but only 4300 MOM cores.

PD: Have to go now. Bye.

NH: Are you going to try getting the bigger config running? AH: 6 months per submit is very interesting. Could do some decent 100 year runs. Could be worth looking at.

MW: According to the numbers I have been posting, could speed MOM up by a factor of 4 with more cores. CICE ice step is also scaling well. Don’t know if switched to sectrobin, or missing a coupling bottleneck. This model could scale well. Has anyone checked with sectrobin? NH: This is using it. MW: I am talking about much larger, I get improvement up to 12K CICE cores. AH: Load balancing not an issue? MW: Maybe, but I’m not seeing it with the function I am testing. Is this new? Or was this always the case and I’m not seeing what slows the model down? If it is new, maybe we should experiment with throwing more cores at CICE. Even with the low config, maybe you could ramp the CICE cores up to 600? NH: That one is MOM bound. AK: The IAF was using round robin, and dt=450s. MW: Right so, that is your difference right there. AK: Dropping ndtd to 2 makes it MOM bound.

NH: Let me know if there is anything I can do to improve the load balancing in larger config. Pretty sure there is larger config using sectrobin.

MW: I’ve seen consistent improvements in all CICE configs, including 1 deg. Doubling cpus in CICE and MOM individually in 1 degree saw improvement in both, but got errors when I tried to do both. Gave up because 1 degree not so important. Will try again in tenth degree.

NH: Sounds like you’re discovering what the best config should be using some evidence based mechanism. The 1 degree config was pulling numbers out of a hat that balanced ok with decent efficiency. Maybe want a small/medium/large config, but not for all resolutions. 1 degree maybe just want max throughput. Would be great to capture what you’ve discovered, and actually start to create those configurations.

MW: MOM numbers are clear. Time per step is very informative. Want numbers around 0.1s/step. Allows us to tackle this independent of time step. Surprised CICE is scaling. Reminds me of the last COSIMA talk which everyone said was wrong because I didn’t have enough ice. Will make a figure, which will show strong scaling numbers for CICE. Looks like it is a strong scaling model.
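A time-per-step figure converts to throughput once a time step is chosen, which is why it is a convenient metric for comparing layouts. The sketch below assumes a 365 day year and ignores initialisation, IO and coupling overheads; the function name is hypothetical.

```python
SECONDS_PER_DAY = 86400


def wall_hours_per_model_year(seconds_per_step, dt, days_per_year=365):
    """Wall-clock hours needed to integrate one model year, given the
    wall time per step and the model time step dt (both in seconds)."""
    steps_per_year = days_per_year * SECONDS_PER_DAY / dt
    return steps_per_year * seconds_per_step / 3600


# 0.1 s/step at dt=720 s is 43800 steps, i.e. about 1.2 wall hours per year.
print(wall_hours_per_model_year(0.1, 720))
print(wall_hours_per_model_year(0.1, 900))
```

The same 0.1 s/step target gives a shorter wall time at dt=900 s simply because fewer steps are needed, so the per-step number isolates the scaling behaviour from the choice of time step.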

AK: On CICE, there is a workshop in Hobart in late Feb. It is a CICE modelling workshop, and Elizabeth Hunke is visiting. AH: Are they up to CICE6? MW: Dealing with a fork. Los Alamos has a version no-one is allowed to see. NH: Worth looking at the CICE repo. Our CICE5 version contains all changes up to CICE6 tag. We’re as up to date as you can be on CICE5. AH: Have you back-ported stuff? NH: Yes, it wasn’t very hard. This is CICE6, but it has CICE5 in the repo. AH: They have a cice6 branch. MW: What is ice-pack? NH: They have split out the column physics to make it more portable. It is just code from CICE5 but repackaged. AH: Icepack is included in CICE6 as a submodule.

AH: Ruth has been having queuing issues, anyone else having problems? Might just be someone using broadwell heavily. Do you know if we have to use broadwell for minimal? NH: My runs used to crash. AK: My runs are ok on normal. Running the same as Ruth. AH: Using less than 1GB/processor? NH: I put something in config.yaml so when running in normal it used the high memory nodes. Did you take that out? AK: Yes I removed that. NH: Who knows. So much has changed since then. Memory can spike. AK: Ruth should be able to run on normal without requesting extra memory. MW: What made you think it was a memory issue? NH: Crashed at a particular place on initialisation in MOM where I know there is a big jump in memory usage. I have made an issue about it. It is in FMS. As soon as I went to the high memory node it went away. Doesn’t print out specific memory errors, but as soon as I increased memory it ran fine. Good to go back if it has gone away. It is something that gets done on the MOM root PE on initialisation that increases memory usage a lot. AH: Shame we no longer have access to the PBS logs as there was a lot of information in there on this sort of thing.

MW: Intel 19 gives awesome error messages. Not only are tracebacks more readable, but they give 7 lines of code each side of the error. Now getting MPI tracebacks without even using mpi-debug. Can’t use openmpi/1.10 with Intel 19.

AH: To use extra time at the end of last quarter suggested we could do a parameter sweep, which would use up a lot of compute time in short order. It didn’t end up happening, but Andy Hogg thought it would be a good capability to develop in case we did want to deploy it. I understand AK has done this a lot? AK: Well sweeps of 2 parameters, yes.

AH: Not a big deal, using payu, maybe have a YAML file specifying parameters and how to sweep them, create a bunch of payu configs programmatically and run them. MW: Always wanted to add this as a feature in payu. Spin off 20 runs with one file. AH: Any particular ideas, or anyone want to do it, feel free, otherwise will go on my very long to-do list. NH: I couldn’t think of anything. I don’t know enough about the science. AH: Me too. I have no idea what parameters I would want to change, so that is where it stopped. The parameter search could mean you always have something to run if there is spare time and add it to the database. If you have a database of runs, you effectively have a parameter search if you change anything.
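The idea of generating a set of run configurations from one sweep specification can be sketched as a Cartesian product over the swept parameters. This is not a payu feature: `expand_sweep`, the parameter names and the label scheme are all hypothetical, and writing the configurations to disk or submitting them is deliberately left out.

```python
import itertools


def expand_sweep(base, sweep):
    """Return a dict mapping a run label to a full parameter set, one entry
    for every combination of the swept values. `base` holds the default
    parameters; `sweep` maps a parameter name to the values to try."""
    names = sorted(sweep)
    configs = {}
    for values in itertools.product(*(sweep[name] for name in names)):
        overrides = dict(zip(names, values))
        label = "_".join(f"{name}-{value}" for name, value in overrides.items())
        configs[label] = {**base, **overrides}
    return configs


# Hypothetical two-parameter sweep: 2 x 2 = 4 runs from one specification.
runs = expand_sweep({"dt": 720, "ndtd": 2, "split": 80},
                    {"dt": [600, 720], "ndtd": [2, 3]})
for label in sorted(runs):
    print(label, runs[label])
```

Keeping the expansion separate from the file writing and job submission means the same list of configurations could feed either a set of run directories or a database of runs.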

AK: Have a current grant to do a study on parameter sensitivity in CICE. This capability could be useful.

NH: I have written something which can divide your grid by an integer number, creates initial conditions and everything. Could be interesting to programmatically create a bunch of configs and run them with something like this.

Date: 11th December 2018

Attendees:

MW: Been profiling CICE, score-p profiling doesn’t work. Been timing by time step. Anomalously long time spent at step 72. AH: could it be atmosphere being updated. JRA55 is 3 hourly. Not sure timestep. MW: Seem to have lost my logs. Not sure best way to handle it.

AH: Peter has been testing release candidate. Russ supplied a diag_table which just outputs fields for first 2 time steps which is really good for seeing code issues. Russ found some bugs introduced by me. A couple of logic errors with preprocessor flags and omission of a couple of lines that got lost in translation. Confident latest update has squashed all the bugs. MW: Not old bugs? AH: Did find some old issues. Russ found a stuffed iceberg file. RF: Not related, but is something they were using for CMIP6. AH: Did find some old bugs, had to emulate the lack of reproducibility from the red sea salinity fix timing bug to be able to closely reproduce CM2 output. Put a flag in to do the wrong thing to do the same as theirs, will remove before merging. MW: I thought red sea fix had been changed to be faster but not reproducible. RF: That’s right, but not the issue. This has to do with timing. Aidan fixed it, but not compatible with what they are using. AH: Just need something that reproduces CM2 output.

Narrator: The new way of doing salt fix will reproduce over time steps, but is not bit reproducible with the old algorithm. Don’t see that effect in these tests.

AH: Peter has a test suite which is old CM2, and a copy which uses updated MOM. He compiles the new code manually and runs the two suites side by side. Both use Russ’ diag_table. Just find out which fields don’t match. Most are the same, few different, seem to be affected by the same issue. Once we’re good for a few time steps then maybe look at them after a few months. RF: Once chaos starts, hard to say. As long as nothing gross happening. Unless there is something further on with coupling. AH: Yes, look after a month and check it looks close. MW: Not trying to be bit reproducible? AH: Just want to fix my bugs. RF: Make sure you’re getting the same forcing fields. Can see out in the open ocean hardly any change. Just noise. This means we’re close. Saw the outline of where the forcing field is supposed to be. The bug in the forcing field data showed up, which indicated the issue. AH: Once we’ve confirmed fixed, will merge PR and then move on to ESM.

MW: Will the CM2 code remain in step with the MOM5 code? RF: CSIRO Aspendale not doing much code development at the moment. AH: Peter is pulling directly from his GitHub repo, but once it is harmonised they will pull directly from the MOM5 repo. They will want to have a tag and pull from the tag. RF: Yes they will want frozen versions. AH: Should have some automated testing, if we find a bug, should be able to update CM2 code and confirm it doesn’t change important answers.

AH: Short answer: Lots of progress. I made lots of bugs and Russ found them. Thanks Russ. NH: Yes thanks Russ.

Date: 13th November 2018

Attendees:

MW: payu is now python3 compatible. Can be run from a .local install. No longer uses modules. Tagged 0.11.1. AH: will also install into conda environment on raijin. NH: sounds great. Have used in conda python27 environment. Good to have python3.

MW: Want to get this to a position where I can leave it to others to support. Might get GFDL interested in it. Have time to wrap up a lot of things.

AK: How rigid is the 3 digit output in archives? MW: Only a print statement. Should work with higher numbers. AH: Won’t list nicely. MW: Had meant to add a format option.
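The listing problem AH mentions comes from lexicographic sorting once the counter outgrows its zero padding. The sketch below shows the effect and how a configurable width avoids it. The `archive_name` helper and its `width` argument are hypothetical, not payu's API, and the `output` prefix is used only for illustration.

```python
def archive_name(prefix, counter, width=3):
    """Return a zero-padded directory name, e.g. 'output007' for width 3."""
    return f"{prefix}{counter:0{width}d}"


def sorted_names(counters, width=3):
    """Names for the given counters, in the order a plain listing shows them."""
    return sorted(archive_name("output", n, width) for n in counters)


print(sorted_names([998, 999, 1000]))
# ['output1000', 'output998', 'output999']
print(sorted_names([998, 999, 1000], width=4))
# ['output0998', 'output0999', 'output1000']
```

Names wider than the padding are still valid, which matches MW's point that higher numbers should work. They simply sort out of order until the width is increased.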

MW: Try out the new payu, want to make it the new one.

AH: Peter Dobrohotoff sends his apologies, cannot make meeting.

AH: Do we need to decide on a new chairman? MW: Happy to resign straight away. MW: Considering going to GFDL in February to bootstrap remote working. AH: Nic did you want to take over? NH: No, convinced it’s not a good idea. AH: Sort something out next meeting. Last one of the year?

AH: Shame, as PD tested harmonised MOM5 but used incorrect namelist options. Losing some momentum as would like to have that checked off so we could start harmonised ESM.

MC: Wrong namelist options? RF: Didn’t have correct namelist options to include my new mixing scheme.

AH: They were also concerned about background mixing, in case that was having an effect. Could point them to the relevant PR/Issue on GitHub with all the plots and documentation showing it was working correctly. This is a valuable resource and a good way of working.

AH: Richard Matear contacted me and wanted to know status of ESM harmonisation and what he could do to help it progress.

ESM is using a version of MOM5 updated to the beginning of the year with WOMBAT added by MC. It was decided to not continue with ESM harmonisation until CM2 bedded down, as it requires some of the code changes from the CM2 harmonisation.

MW: Is cylc suite used for testing using the MOM repo compilation? AH: I believe Peter is currently turning off the automatic compilation in the suite and using the repo compilation script on the command line to create the MOM executable. MW: I think improving/streamlining and harmonising the cylc suite is as important as harmonising the code. AH: I would have liked a test suite that incorporates this, but this is the way Peter has been comfortable working. Can’t progress ESM until have the all clear that CM2 is working correctly.

MW: Richard & Matt are using the ESM model? Not using GFDL stack? MC: GFDL in decadal project. This harmonisation will get WOMBAT in the MOM5 master branch, which has long been a goal. Decadal project not using UM. Research effort there is data assimilation and reanalysis that they’re running, rather than updating the model. Won’t hear much from them. When AH gets the WOMBAT code in, please contact us. Have experience using payu runs at 0.25 deg. Will fix you up with files and help when it comes to testing. AH: I will mostly be relying on others to run stuff. MC: Not running under payu currently. Out of the loop at the moment. Did run one of Kial’s runs. I need help running with payu. AH: Yes we can do that together. Holger is trying to get payu to run with ESM, making slow progress. MW: Who is supporting the ESM? Surprised Richard will contact AH. AH: Someone told him it was part of this code harmonisation. MC: Richard’s interest is WOMBAT in MOM5. AH: ESM will be the CLEX coupled model. MW: Who will be responsible for ESM? AH: Tilo is doing a CMIP6 submission with ESM. CLEX will be wanting to use all of Tilo’s runs to spin off their own experiments. At that point CLEX CMS will support the model with payu on NCI HPC systems. MC: Tilo is doing the work of the equivalent of the entire CM2 team to get ESM working. We support Tilo somewhat, and there are others who are no longer formally part of the team but contribute. Richard has interests in this space also. AH: Tilo can benefit from work we’re doing. Scott found a 10% improvement in UM speed. MC: Speed/efficiency not a priority right now. Focus on land model, forcing etc. Not #1 priority. AH: If they’re open to that input, they can still get the benefit even if it isn’t their focus.

MC: Have to go to another meeting.

AH: Do we need to move the meeting? MW: Happy to move to another day if it works for people. AH: Doodle poll? RF: Next year. MW: Ok, do something next year.

AH: Working really well. Would like to get it to run a little faster so she could get 2 months per submit. Which would improve her throughput a lot. Does NH have any ideas to speed it up? Seems like MOM model has 10 minutes of spare time. Is it CICE bound? NH: That might be the initialisation time. AH: Don’t MOM timings take into account initialisation? NH: Not until recently. Marshall fixed it. MW: Clocks weren’t showing MPI initialisation time, just MOM initialisation time. AH: Could be 10 minutes? MW: I would be surprised. Would guess 5 mins, less than 10. Big model, spends a lot of time on field exchange.

AH: Not compiled with AVX2. Will that help? MW: Did AVX and AVX2 test. Could see the difference, wasn’t large enough to bother making non-compatible binaries. NH: Slack me the path and I can take a look. Haven’t spent a lot of time trying to optimise it. MW: Can sometimes improve time by changing layout. GFDL tried very long tiles that means halo updates are only north/south. NH: She might have an older config. I switched to Sandybridge for efficiency. MW: I think you can get 7-8% speed up going from AVX to AVX2. AH: I advised Ruth to use broadwell due to memory requirements making for better throughput. AH: I suggested she have higher diag_steps, but RF pointed out global scalars mean she is doing daily global MPI calls. RF said doesn’t necessarily have to be this way? Could it be changed in FMS? RF: Yeah, all the diagnostic code. In many cases time average can be commuted with area average. Every timestep doing a MPP sum or MPP global sum, can do local sums on local process and call MPP sum when you need to. Could rewrite the MOM code to do cumulative average and do an instantaneous output. Sort of fudge an average. MW: Wouldn’t make much difference to speed. RF: Depends how much those global sums are hurting you if any, but it might be a single global sum acts as a synchronisation point and doesn’t really matter. MW: I told NCI MOM didn’t do collectives because they were so fast they didn’t show up in profile. So unlikely to help a lot. RF: MOM collectives are very simple. If not doing bitwise stuff, just taking a collective of one number. MW: Caveat, only tested at 0.25 deg, so can’t know for sure it is the same at 0.1 deg. Should do it because it will eventually start to bite. Could do a profile?
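RF's point about commuting the time average with the area average can be sketched in plain Python. This is an illustration only: the ranks are simulated with lists, the data are random, and `global_sum` is a stand-in for a collective such as an MPP global sum, not an FMS call.

```python
import random

random.seed(0)
n_ranks, n_steps = 4, 8

# field[t][r] is rank r's local area-weighted sum at time step t (made-up data).
field = [[random.random() for _ in range(n_ranks)] for _ in range(n_steps)]


def global_sum(values, counter):
    """Stand-in for a collective sum across ranks; counts how often it is called."""
    counter[0] += 1
    return sum(values)


# Approach 1: a global sum every time step, then average in time.
calls_every_step = [0]
avg_every_step = sum(
    global_sum(field[t], calls_every_step) for t in range(n_steps)
) / n_steps

# Approach 2: each rank accumulates locally, one global sum at output time.
calls_deferred = [0]
local_accum = [sum(field[t][r] for t in range(n_steps)) for r in range(n_ranks)]
avg_deferred = global_sum(local_accum, calls_deferred) / n_steps
```

The two averages agree to rounding error but not necessarily bit for bit, because the order of summation differs. Whether the saved collectives matter in practice is the open question MW and RF discuss above.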

AH: AK only does 2 months/submit, so maybe we would all be better running a minimal config? MW: doesn’t bode well for exascale. AH: So many constraints with PBS etc. AK: Optimised for model+machine+queue constraints not for the model on its own. NH: Could just bump all the cpus by 10%? AH: You’ve got a nice sweet spot there, first try AVX2 and see if we can get the speed up we need.

MW: Vectorisation can help. AH: Would bigger tiles help? MW: So much time moving in and out of L1 cache that it doesn’t make much difference. AH: Broadwell got bigger caches? MW: Bigger L3 but 12 more cores. NH: Is she using 600s timestep without crashing? AH: Had an ice remap crash after a couple of years. AK: Unclear if that will happen again. Doing RYF, and I found once the crashes started happening they kept happening. RF: Using latest bathymetry? AK: Yes. RF: Any difference? AK: No idea. NH: I think it is generally more stable.

MW: Can play with barotropic halo. Barotropic solver has halo of 10 so it can do its work every 10 steps. Might be able to get some speed up by playing with that. AH: Put path to Ruth’s control directory on TWG slack channel.

NCRIS is doing a scoping study to see if it is feasible for a team of 15-20 people to support ACCESS modelling in Australia, which would be used for submission for funding from NCRIS. The meeting was to get feedback to help write the submission.

Some discussion of the experience of the meeting.

RF: MOM uses Proleptic Gregorian calendar type, but does not use the correct calendar attribute when outputting the file. It sets it as Gregorian instead. So, when using days since 01/01/0001 there is a jump in October 1582 depending on which calendar is used. Get a 2 day offset for IAF files because of this incorrect calendar attribute. Found python netCDF interface uses udunits calendars and has problems. Had to force it to proleptic gregorian to read dates correctly. Big issue when dealing with daily data. Output files need to be fixed. Could change the calendar attribute to Proleptic Gregorian or change units to be days since a year after 1582. MW: GFDL use since 1900? RF: Yes, as this is what Ferret uses.
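The 2 day offset can be reproduced with the standard integer Julian Day Number formulas. The sketch below is self-contained Python. The function names are illustrative and the test date is arbitrary. It compares "days since 0001-01-01" when the origin is read on the mixed Julian/Gregorian calendar against the proleptic Gregorian reading:

```python
from datetime import date


def jdn_gregorian(year, month, day):
    """Julian Day Number of a (proleptic) Gregorian calendar date."""
    a = (14 - month) // 12
    y = year + 4800 - a
    m = month + 12 * a - 3
    return day + (153 * m + 2) // 5 + 365 * y + y // 4 - y // 100 + y // 400 - 32045


def jdn_julian(year, month, day):
    """Julian Day Number of a Julian calendar date."""
    a = (14 - month) // 12
    y = year + 4800 - a
    m = month + 12 * a - 3
    return day + (153 * m + 2) // 5 + 365 * y + y // 4 - 32083


def days_since_year1(year, month, day, calendar):
    """Days since 0001-01-01 for a date after October 1582."""
    target = jdn_gregorian(year, month, day)
    if calendar == "proleptic_gregorian":
        origin = jdn_gregorian(1, 1, 1)
    else:
        # Mixed calendar: dates before the 1582 switch, including the origin, are Julian.
        origin = jdn_julian(1, 1, 1)
    return target - origin


offset = (days_since_year1(1958, 1, 1, "gregorian")
          - days_since_year1(1958, 1, 1, "proleptic_gregorian"))
```

Python's own `datetime.date` is proleptic Gregorian, so `date.toordinal()` gives an independent check on the proleptic count. Moving the reference date to after 1582, as suggested in the discussion, makes the two calendars agree.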

AH: Had a lot of date issues using python. Uses date library from numpy as there is limited date range available due to nanosecond resolution. We often have to do date offsets anyway, so probably don’t see this issue as much. Should we put proleptic gregorian into MOM? MW: Shouldn’t we change the start date? RF: That is the easiest thing. There is a lot of broken software that doesn’t treat these calendars correctly. MW: should tell GFDL about this. RF: Looked at the code and made some changes, but not uploaded. AK: Is MOM using the correct dates? As with coupling to CICE etc? RF: Works ok internally. AH: Arguably a bug if they’re using proleptic and not using correct attribute. RF: Yes. CMIP6 accounts for this. Checks for dates before 1582 and requires using proleptic gregorian. Future runs should have an offset of some later date.

RF: Getting huge number of messages from restoring files starting at year 0000. Restoring files on a time modulo axis and created from Ferret, which automatically treats any file with a start year of zero or one as modulo. However year zero does not exist and is incorrect. Just need to change that attribute in the restoring files, won’t make any difference to operation but save a huge number of warning messages. MW: I get 482,000 lines of errors. I would be very happy if this is fixed. MW: Someone should change those fields. RF: I don’t have access. MW: Should go and edit the public forcing fields. What specific files? AH: If you’re talking about the ACCESS-OM2 configs, NH has the most ability to change them. MW: salt_sfc_restore? RF: Yes, and temperature, chlorophyll. Anything seasonal, a restoring. MW: Anything that says “months since 0000”? AH: Yes change to 0001. RF: Anything that uses that date (zero years) can be changed. AK: Anything that isn’t JRA that isn’t multiyear? RF: Maybe runoff? Do we use the JRA runoff? The problem really is the stuff MOM reads directly, like sponges. NH: I am happy to look into this. I might be the only one with access, hope not. Have been thinking about this for a while. Changed the OASIS code as well to ensure DEBUG_LEVEL zero does not output anything. Was outputting thousands of lines. Also an Andy Hogg GitHub issue and this was the next one on my list. MW: So far I can only find salt restore and ssw shortwave. RF: Shouldn’t be using that. Should be using GFDL formulation which reads in chl.nc.
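The attribute change agreed above amounts to a small string rewrite on the time `units` attribute. A hedged Python sketch of just that rewrite follows. The helper name is hypothetical, and writing the result back into the restoring files would need a netCDF tool or library, which is not shown here.

```python
import re


def fix_year_zero_units(units):
    """Rewrite a 'since 0000...' time origin to start at year 0001.

    Only the four-digit year directly after 'since' is touched, so a
    trailing time of day such as 00:00:00 is left alone.
    """
    return re.sub(r"(\bsince\s+)0000\b", r"\g<1>0001", units)


examples = [
    "months since 0000-01-01 00:00:00",
    "months since 0000",
    "days since 1900-01-01 00:00:00",
]
fixed = [fix_year_zero_units(u) for u in examples]
```

Units that already use a valid origin pass through unchanged, so the helper could be run over every candidate file without first checking which ones need it.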

AK: Some files in those ACCESS-OM2 input tarballs that aren’t used. Should they be removed? NH: Posted on slack about this? AK: Yes, but not sure they aren’t used by someone. NH: Bit messy how this is done. Should really just have a bunch of files and grab what they need. Would save a lot of space. Currently versioning sets of files rather than individual files.

AK: Some issues on GitHub. RF: Been discussing this with AH and Scott Wales. An attribute needs to be removed. AH: Biggest issue is regional outputs having incorrect dimensioning. Which has been fixed. Also fixed the unlimited dimension getting squashed. Also another issue with passing too many files on the command line due to an MPI issue. Requires a change to payu as globbing is now done internally so any glob needs to be quoted. It’s on my list of tasks.

MW: Original tool used a pattern? AH: Didn’t implement that in mppnccombine-fast, maybe we should? MW: Stopped doing that in payu to support some coupled FMS codes where tiles didn’t start at zero, but could go back to the old way. AH: Does using the pattern work with masked configs when tiles are missing? I can’t recall. MW: Not sure.

AH: AK and I went through some of the 0.1 deg output directories and found we could get significant space savings in the ice diagnostics. AK: Ice outputs are not compressed, daily data is in individual files, half of which is grid data. Can get an 8-fold decrease in size. Out of 20TB of total data can save 12TB of space. AH: Want to make a post processing script to run this automatically. AK: Yes, also delete all the zero length log files. AH: This was to clean up for archiving. MW: payu should do this, maybe not looking in the right places. NH: FORTRAN has an option when closing a file to delete if empty, so looking into that. Also some CICE logs just have one line at the top with exactly the same text. AH: Yes we found those, matched the same number of bytes and deleting them. MW: If payu isn’t deleting zero length files, it is not sweeping through submodels. AH: A lot tidier after cleaning. AK: Yes an hour well spent.
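A minimal sketch of the zero-length log clean-up, assuming the logs match `*.log` somewhere under an output directory. The function names, the pattern and the dry-run default are illustrative choices and do not reflect how payu does it. The demonstration at the end runs in a temporary directory.

```python
import tempfile
from pathlib import Path


def find_empty_files(root, pattern="*.log"):
    """Return all zero-length files matching `pattern` anywhere under `root`."""
    return sorted(
        p for p in Path(root).rglob(pattern)
        if p.is_file() and p.stat().st_size == 0
    )


def remove_empty_files(root, pattern="*.log", dry_run=True):
    """List empty files and delete them unless `dry_run` is set."""
    empties = find_empty_files(root, pattern)
    if not dry_run:
        for p in empties:
            p.unlink()
    return empties


# Demonstration on throwaway files, including one in a subdirectory.
with tempfile.TemporaryDirectory() as tmp:
    (Path(tmp) / "sub").mkdir()
    (Path(tmp) / "sub" / "empty.log").write_text("")
    (Path(tmp) / "full.log").write_text("some output\n")
    found = [p.name for p in remove_empty_files(tmp, dry_run=False)]
    remaining = sorted(p.name for p in Path(tmp).rglob("*.log"))
```

The recursive search is the point MW raises: a sweep that only looks at the top level will miss empty logs in nested directories.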

Date: 16th September 2018

Attendees:

MW: Taken position at GFDL. Starting 3-6 months. Need new TWG Chairman. Need to organise meetings. Not much communication with other working groups. AH: Anyone who is interested think about it, we can decide at a subsequent meeting.

MW: As I am leaving, no one left at NCI following ocean model development. NCI will appoint a new person, but RY is attending for some knowledge transfer.

AH: there is a cm2_release_candidate branch on MOM5 repository. Contains all substantive code changes from Hailin’s fork on Peter’s repo.

AH: Need a rose suite to support MOM5 compile script. Might get Scott Wales to help make the suite. MW: I might be able to help. AH: Used original MOM_compile script? MW: Not sure. AH: Currently pulls in a build script from a totally different svn branch.

PD: Yes MOM5 in git repo. One of the directories (exp) has the same build script as you’re using AH. PD: I cloned your repository, copied over compile script and environment file, pressed go and it compiled. Problem at link time. Don’t have an opinion about build script being in repo. Rose suites do “blossom”. Ok to compile from command line at the moment. Can consult with AH offline.

MW: Are AH and RF happy with the code changes itself? AH: last set of changes are that crucial. Steve Griffies would have liked more atomic changes. Need to run, see if it is different, if it is, figure out how different and if it is important.

AH: Next harmonisation target is ACCESS-ESM-1.5, adding WOMBAT BGC. This will go into the main MOM5 repo. In theory will also be in the CM2 version of MOM5. It won’t be turned on, but we should check that it doesn’t make a difference to CM2 results.

AH: Seems straightforward, as MC had already put WOMBAT BGC into MOM5, but there have been some changes since then. MC: Pull 3 years of MOM5 changes into my own branch. RF: ocean_sbc is what hooks into WOMBAT. The components we’ve added in, like 10m winds and sea ice coverage is what WOMBAT wants. What we’ve got there now is compatible, except WOMBAT assumes 10m winds aren’t masked, and uses sea ice coverage to do masking. MC: Yep. RF: The way we do it, it is already masked. So might need a change to WOMBAT, or a flag. MC: does multiple masking matter? RF: if it’s multiplying by ice fraction, don’t want to multiply a second time. MC: Around the fringes? RF: No difference to open ocean or full ice coverage. RF: Pretty close to correct. Changed the interfaces. A lot of things in ocean_model can be kept in ocean_sbc. I can go with it with Aidan.

MW: Only time pressure is when adopted in CLEX? AH: No. Some people would like this to be in the ACCESS-ESM-1.5 CMIP runs. I don’t know what the politics situation is like. MC: Tilo is anxious to get control runs going ASAP. If there is a change to a stable version he will run with it. Catia and Fabio are anxious to get extra diagnostics in for their experiments, but not central to ESM effort. Tilo will start as soon as he has his carbon cycle stuff fixed. MW: Pressure point on RF? RF: I’ll look at it. Just need to throw in a couple of the hooks into WOMBAT, but think they’re there. Should be straightforward.

AH: made a PR, link on TWG slack channel. Cherry picked out commits that seemed necessary. If make code changes please pull down latest code before submitting changes. Can delete fork if necessary and start again. RF: Yes, done that a few times.

MW: Harmonisation on track? AH: Holger is working on payu version for ACCESS-ESM-1.5. MW: CLEX specific? PD: CLEX is picking up ESM as climate model. We are all working in the same direction. Lots of non-CMIP science coming out of these models. Shouldn’t dismiss payu as something we don’t care about.

NH: Running minimal 0.1 degree config. Around 2K cores. Maybe not actual minimum, but decent compromise. Good efficiency. With dt=600s, around 5KSU/month. Models well balanced. Ice model not slowing things down and only using 350 cores. MW: sectrobin? NH: yes but probably doesn’t matter.

NH: Thanks for heads up for NCAR tripolar efficiency fix for CICE. RF: Surprised it makes a difference at low core counts. NH: Not sure it does, just wanted everyone to know it is now in the code. NCAR say they have checked they get identical results, confirmed no difference. One month in 2.5 hours with dt=600s. Can’t squeeze in 2 months/run. AK: What diagnostics? NH: Just monthly. Same as AK’s, changed daily to monthly, just in ice. AK: Currently have 3D daily prognostic fields. NH: Might slow things down a bit. Because this config is small it is nicely balanced. Fitting so much work into each ICE PE, there is more chance they are balanced. Using 8 blocks per core. AK: ndtd=3? NH: no, try with ndtd=2 to begin with, and seems to be going ok.
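The quoted figures are mutually consistent, which a short calculation shows. This sketch assumes a charge rate of one service unit per core-hour. That rate is an assumption for illustration and is not stated in the minutes.

```python
def ksu_per_model_month(cores, walltime_hours, su_per_core_hour=1.0):
    """Cost of one model month in thousands of service units."""
    return cores * walltime_hours * su_per_core_hour / 1000.0


# "Around 2K cores" and "one month in 2.5 hours" from the discussion above.
cost = ksu_per_model_month(cores=2000, walltime_hours=2.5)
```

Under that assumption the result matches the "around 5KSU/month" NH reports for dt=600s.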

NH: Currently crashing off tip of Severny Island. High velocities at tip. Crashing after 14 submits (months). Surprised it took so long to crash. Done some work smoothing bathymetry. Doesn’t seem to have helped, now trying Rayleigh damping. RF: What month? NH: October. RF: Is there ice there? NH: Don’t think so. RF: Had a look at other months. A jet of warm salty water coming up from the south along the coast. Those sea mounts are there. NH: Almost completely levelled them. Still a dip. Cleared seamounts before and in the dip. Velocities are very high there. Highest velocities that far north by a long way. Wondering if it is an extreme situation. AH: I tried the truncate_velocity option north of a certain latitude. Didn’t work, had a temp or salt blow up, so don’t bother. MW: usually a no-no. AH: Had the same issue with MOM-SIS-01 with CORE-II NYF, same crash, same time every year. RF: Interesting that same problem with a different bathymetry. AH: Severny Island pokes a long way north, any flow coming that direction gets funnelled along the coast. Could stop crashes with Rayleigh damping at depth in small area NE of sea mounts. Steve not happy as a solution, but one small spot places ocean timestep limit on the whole global model. I think we should use Rayleigh damping if it stops this. RF, NH: Agreed.

AK: Same crashes in same location when I’ve attempted 600s timestep, so wound it back. Put Rayleigh drag in Kara Strait. NH: Yes I have those. AK: Can give some idea of scale of drag required. Also that drag might be pushing more water around the Severny Island. AH: You already have Rayleigh drag in your model? NH: yes, all of AK’s additions. Understand some of the frustration with this model. Small config, easier to run and test. Want to push timestep as far as possible. AK: Sounds like a good strategy. Though concerned by oscillations in vorticity field in shallow area south of Bering Strait. Some sort of numerical glitch. Goes away with 450s timestep. Seem to get stuff like this when timestep is pushed up. AH: Any idea where it is coming from? AK: Not sure which terms/equations involved. Dispersion gets worse as CFL gets higher. Not sure. NH: Explore some of these things, as MOM-SIS-01 was running at 600s right? AH: Yes with Rayleigh damping. AK: Fanghua was using MOM-SIS-01 with this bathymetry, couldn’t go higher than 450s. Added damping and did a lot of work to track down issues. AH: Bathymetry has changed since then? AK: Yes, problem with ocean that shouldn’t have been. NH: Didn’t realise Fanghua used same bathymetry. AK: Similar. Would have had one full of potholes.

RF: Anyone used new bathymetry I made? Couple of cells filled also, but mostly partial cells. In bathymetry directory, added about a month ago. NH: Will try it.

NH: Want to get recent CICE changes into 6K PE model using one of AK’s restarts. Crashing with ice remap transport errors. MW: Include tripole changes? NH: yes. Also sectrobin code change (also doesn’t change answers). Experimenting with sectrobin and blocks to get a more efficient setup. MW: That is what I am running and trying to understand. If I do a git pull from yours will I expect crashes? NH: Crashes not due to code, just model instability. Tested that code doesn’t change answers. MW: Will try that.

AH: Which is the correct bathymetry file? Some discussion, turns out the new file is

/g/data3/hh5/tmp/cosima/bathymetry/topog_05_09_2018_1m_partial.nc

AK: To overcome ice crashes like that, use ndtd=3 to give ice more time. NH: You haven’t had ice remap crash since using this? AK: Correct. CFL issue, ice moving more than one grid cell per timestep. NH: Ice is going unrealistically fast, 35 m/s. MW: How does it do this? AH: Instability? NH: Yes. AK: Is sea surface slope high? RF: Diagnoses slope, derives slope assuming geostrophic properties. Not passing slope from ocean model. If you do, get checkerboard unless smoothed.
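The CFL argument can be made concrete with a small calculation. The sketch assumes `ndtd` divides the timestep evenly into dynamics subcycles and uses an illustrative 5 km grid spacing. Both the spacing and the helper names are assumptions rather than values from the configuration.

```python
def ice_courant_number(speed_m_s, dt_s, dx_m, ndtd=1):
    """Grid cells crossed per dynamics subcycle; values above 1 violate the CFL limit."""
    return speed_m_s * (dt_s / ndtd) / dx_m


def max_stable_speed(dt_s, dx_m, ndtd=1):
    """Fastest ice speed that stays within one grid cell per subcycle."""
    return dx_m * ndtd / dt_s


dx = 5000.0  # assumed grid spacing in metres, for illustration only
dt = 600.0   # model timestep in seconds, as discussed above

limit_ndtd1 = max_stable_speed(dt, dx, ndtd=1)
limit_ndtd3 = max_stable_speed(dt, dx, ndtd=3)
runaway = ice_courant_number(35.0, dt, dx, ndtd=3)
```

With these numbers `ndtd=3` triples the speed the ice can reach before crossing more than one cell per subcycle, yet ice at 35 m/s still exceeds the limit. That is consistent with NH's reading that such speeds signal an instability rather than a timestep that is merely too long.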

AH: Is ratio of PEs in minimal model same as for large model? NH: In 1/10 ratio is about 1:4 ice:ocean. Minimal model it is 1:5.

RF: Bugfixes found in CICE6 should be back ported. Were using the wrong mask in the EVP solver for updating the halos. Stops bit reproducibility. NH: I saw that bug list. Know where they are. Will bring them across. RF: Found different types in u and t masks (one logical, one 0/1).

MW: Latest profiling shows EVP taking most of the time, and in particular EVP halos. Wonder if these have any effect. RF: Purely a masking issue. Could be the cause of the strange stuff due to tripolar join. Only 5 lines of code. MW: Huge patch? NH: No. Not messy. This is not a big change of code.

AH: With CM2 with old versions of CICE5 with UM hooks etc. How serious an issue before back port to CM2 version? MW: Not time to go into that too far.

NH: Since CICE6 is just incremental improvement of CICE5, maybe we should use that in future?

MW: Ben arranging meeting with Team Leaders in this space. Set meeting on Nov 7. NH to be contacted? NH: I think I am going. MW: Discussing infrastructure needs for next 10 years. Would be good to have a consistent view on what is required. Meeting at a high level. MW: RY and I are going.

AH: Doing another payu training for CLEX, covering mppnccombine-fast, file tracking and ACCESS-OM2 configs, how to get them and what to do. Anyone at CSIRO interested?

MW: Will go over more profiling info on slack.

MW: Will merge latest payu versions. Can run without patching python version. AH: Yes can also run in a conda environment, which maybe ticks the portability box for NH.

AH: people on payu/dev should move to payu/0.10.

PD: COSIMA meeting where harmonised code delivered. Amazing! Well done.

New:

Existing:

Date: 11th September 2018

Attendees:

Finished:

Deleted:

MW: 4 block success. 16 block didn’t work. sectrobin also didn’t work. Limited perspective on problem.

RF: blow out in time with extra blocks was halo updates. Weakness with round robin. A lot of overhead, no local comms. Maybe 8 tiles/processor might work. Marshall’s profiling showed small number of processors dominated run time. Want to minimise the maximum. That is the limiter.

AH: Where are the max tiles?

RF: Seasonal ice near Hudson Bay, Sea of Okhotsk and Aleutian Islands.

MW: Nic used total CPU count less than number of blocks

RF: Could run with more, or less. MW: 80 CPUs less, could solve this.

AH: General strategy to concentrate on not assigning CPUs to the low work (blue areas) and let the high work areas take care of themselves?

RF: Only worried about slowest tile. Nice to have even distribution, but hard to achieve that in practice.

AH: Slowest tiles change over time. RF: read in a map of expected ice concentration. Or have a heuristic, say weight by latitude. AH: If identify areas that do very little work, say never want to have many processors there, and free up processors for high work areas.

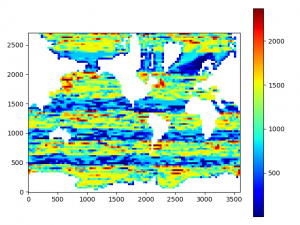

AK: There are five hot stripes and four cold stripes. Some processors have 5 blocks, some have 4. The outlying busiest ranks are on those hot stripes. If we get rid of striping with more even split, that would have maybe a spike on a lower baseline

RF: About half the processors have 5, about half have 4. Request a few more PEs and that would come close to balancing this issue.

NH: First attempt 1600 PEs with an even 4 blocks across all. With idealised test case Ocean was not blocking at all. Thought could save a couple of hundred PEs, and there was not a big difference. However Andrew’s real world config is behaving differently. Worth going back up to 1600 and doing an even 4 or even 8 blocks. Assumed wanted everything to be even. Seemed roughly the same to have a mix. This profiling shows I was wrong.

RF: Can easily work out to get exactly 5 blocks per PE. AK: If you give me that number I can try it. NH: 5 across the board is better. Don’t want a single PE doing more work. RF: Slowest one kills you.
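The striping discussed above follows from integer division: when the block count is not a multiple of the PE count, some PEs carry one block more than others and those set the pace. A minimal sketch, using a hypothetical total of 6000 blocks (the real block count is not given in the minutes) and illustrative function names:

```python
def blocks_per_pe(n_blocks, n_pes):
    """Return (fewest, most) blocks held by any PE under an even round-robin deal."""
    base, extra = divmod(n_blocks, n_pes)
    return base, base + (1 if extra else 0)


def pes_for_even_split(n_blocks, blocks_each):
    """PE count that gives every PE exactly `blocks_each` blocks, or None if impossible."""
    n_pes, remainder = divmod(n_blocks, blocks_each)
    return n_pes if remainder == 0 else None


n_blocks = 6000  # hypothetical total, for illustration only

mixed = blocks_per_pe(n_blocks, 1385)     # uneven: some PEs get an extra block
even_four = blocks_per_pe(n_blocks, 1500)
pes_for_five = pes_for_even_split(n_blocks, 5)
```

With a mix of 4 and 5 blocks the run proceeds at the pace of the 5-block PEs, so choosing a PE count that divides the block count removes the extra load without changing the block size.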

AH: How does the land masking affect it? A thicker stripe in NH? RF: Yes. Did I post a picture of where tiles are allocated? NH: More blocks means getting rid of more land? RF: Lose with communication cost.

NH: In order to get this working I ran into the raijin problem: messages getting lost and deadlocks. When we got 0.1 deg MOM-SIS working had issues with point to point sends and recvs, and Marshall changed that to a proper gather to get initialisation working. The gather inside CICE is implemented with point to point sends and recvs. Assume similar. It is doing a send for every block. MW: Andrew’s finished ok? AK: Ran with 30×35. MW: mxm might resolve this problem? NH: Resolved by putting in a barrier after all the sends, otherwise deadlocks. MW: Did you add barriers? NH: Yes to the MPI gather code. MW: Clear that CICE is heavily barriered. NH: Could implement properly with MPI_gather. MW: Caveat didn’t work with the global field. NH: Only does a global gather once when writing out restarts. Not too bad. MW: A lot of MPI ranks? NH: 1600 x number of blocks is the number of sends. MW: So number of messages, not number of ranks. MW: Only added barrier for restart? NH: Could have done that, but added in MPI_gather. Maybe that is bad? Actually didn’t add, just enabled it by defining a preprocessor flag.

AH: Is there an effect that it gets wider in the north that you’re sampling more ice in those areas?

AH: Should we pull out the slowest blocks and see where all the blocks are that contribute to the slowest processors.

RF: Correspond to areas of highest ice concentration. AH: There is ice in Okhotsk in northern summer? RF: Yes.

MW: Arctic and Antarctic are sharing work. RF: How many for this run? MW: 1385 RF: If you run with 1500 or so get an even distribution.

NH: Should decide what is the next step/run?

MW: Two options, massively increase number of blocks, but this is blowing out with comms time, or evenly divided 5 blocks. RF: Yes that is the one to do next.

AK: sectrobin should solve the communications issue but couldn’t get it to run. NH: Not sure if code needs to change? RF: Test on 1 degree model.

AK: First step to even up current run with 4 or 5 blocks. MW: Should confirm that many blocks is a comms problem and not a tripole issue for example. But this is a research problem.

AK: Will switch to this for 0.1 deg production as it is already better.

NH: New code 1 block per PE gives identical answers to old code. 4 blocks does not give identical answers to old code. Not sure if I should expect it to be the same. Don’t know how CICE works. In terms of coupling it should be the same if you’re coupling to individual blocks or multiple blocks. Not ruling out it should be identical and there is something going wrong. AK: What would make it non-identical? Order of summation? NH: could be something like that. MW: Might be CICE doing a layer calc before doing vertical? Have to know more about CICE. NH: might be worth looking into further so at least we know that we’re not making bugs.

AK: How would I switch to this for the production run? Not bitwise identical? Just check fields look physically reasonable? NH: Hard problem. Can’t see physical difference. Only looking at last few bits of a floating point number. MW: Did an MPI sum on a single rank and it changed the last bit. Found it running the FMS diagnostics and that is why they failed. Don’t fail at GFDL. Scary stuff. NH: Scary and time consuming.
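AK's "order of summation" suggestion is easy to demonstrate, because floating point addition is not associative. The sketch below is a generic Python illustration and is not drawn from CICE or FMS: the same three numbers summed in two groupings differ in the last bit while agreeing to any physically meaningful tolerance.

```python
import math

# The same three values, grouped differently, as could happen when
# blocks are summed in a different order.
left_to_right = (0.1 + 0.2) + 0.3
right_to_left = 0.1 + (0.2 + 0.3)

bitwise_identical = left_to_right == right_to_left
physically_same = math.isclose(left_to_right, right_to_left, rel_tol=1e-12)
```

A difference like this explains a loss of bitwise reproducibility when the decomposition changes, but it does not rule out a genuine bug, which is why NH suggests looking further.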

MW: Clear strategy. Get rid of bands. Go with 1600 cores. Have a 16 block job running, will keep everyone updated.

AH: My understanding with the ESM harmonisation is that we’re close, as we haven’t yet put in the coupling changes from CM2 that you had to take out of the ESM code. PD: Dave Bi’s iceberg scheme? AH: If we get the WOMBAT code into MOM5 that would be harmonised I think. PD: Maybe Matt has a better handle?

MC: Are the OM and CM almost harmonised except for iceberg information? Are they almost the same? AH: I believe so. Once we get WOMBAT in there we’re good to go. Russ had a different idea about how to handle the case of different coupling fields.

RF: Have to get rid of ACCESS keyword. In many cases redundant. AH: ACCESS keyword can be replaced by ACCESS_CM or ACCESS_OM. RF: Yes!

RF: On CICE side of things (and probably MOM) coupling fields are currently defined as parameters. Can use calls to PRISM, test return code, put some tests for legal code/parameters for icebergs for example. Don’t need ifdef’s, can test on the fly. A lot easier than recompiling every time.

AH: How do we implement this? Put WOMBAT code in now so we have an ESM harmonised version and then deal with coupling etc as this is ACCESS-CM? RF: Want to bed down ACCESS-CM and OM harmonised first. The WOMBAT stuff will move in quite simply. I’d like to take that on, have been tasked to do this to take some of the load off Matt. Get this first step out of the way and then move on to WOMBAT and ESM. Until the first step done things can be in a state of flux.

MC: Is wind enhanced mixing in ACCESS-OM? RF: Yes. MC: FAMIP in ACCESS-OM? RF: They’re in MOM5. MC: They weren’t in ACCESS-CM code. AH: That is a 3 year old fork. MC: Can we update ESM from ACCESS-OM? AH: This morning putting WOMBAT changes into MOM5 pull request. Can grab and check if it works. MC: What is the difference in pulling from one direction to the other? AH: ESM is a 3 year old fork with little history in common with current MOM. Couldn’t code into ESM, would be too difficult. Cherry picked your changes into the MOM5 code, but wouldn’t work the other way. Will liaise with Russ to get ACCESS-CM changes.

AH: Would WOMBAT always be part of MOM5-SIS. MW: Is it big? RF: No, very small. MW: Let’s leave it in MOM5. Just executable bloat. RF: Just a few fields. MC: Allocated, so if not turned on, then no issues. RF: WOMBAT wants the 10m waves, but we need that for the wave mixing as well.

AH: ACCESS-OM no longer compiles because you need libaccessom2 as well. NH: Same before. Always needed OASIS. AH: I’ve got CM compiling by pulling in OASIS and make it. All the compilation tests are passing. Could pull in the libaccessom2 and compile in a similar way to ACCESS-CM. There is no old ACCESS-OM build anymore. It is ACCESS-OM2. MW: Do we want to do this external to the repo? AH: Nice to have the tests there and passing. OM now has different driver code to CM, so can’t be sure you’ve done it properly without an ACCESS-OM compilation test. NH: There always needs to be a dependency on a coupler. libaccessom2 is more than a coupler. Maybe some of it is undesirable. Not worse than having a dependency on OASIS. AH: Just wanted to make sure there wasn’t an ACCESS-OM that was independent of libaccessom2. MW: Can you provide libaccessom2 as a binary and headers? AH: Yes, that is a possibility. NH: Could just be a .a file. MW: that is how you handle dependencies, as a binary, like libc. MW: Do you call OASIS in MOM? NH: Yes. In yatm don’t directly call OASIS. Could change coupler in future without changing models. MW: No problem with wrapping OASIS. AH: Can do the same thing I did with CM, pulled in OASIS, built it. Pretty straightforward.

New:

Existing:

Date: 14th August 2018

Attendees:

Peter Dobrohotoff (CSIRO Aspendale) gave his apologies that he couldn’t attend.

MW: Fixed constellation of bugs. 1/10th still not working under MPI, looks like a new issue. How do I approach getting this code into repositories? Not invested, but want it working in the long term. Intel v18 has a bug, fixed, but found another. Dale has built an OpenMPI3 library using Intel v17. Do people want to use it? Or afraid of another issue?

AH: What advantages? MW: Hangs in MPI_Init, commsplit, and hangs at random time steps. MXM seems to have solved random hangs. First two still happening. Still getting random fails during initialisation. Betting on newer library to solve them. Do we want to invest in new libraries and hope we get new solutions, or happy with status quo?

AH: Is there another way? Maybe dev branch on MOM5, submit to more testing? MW: Yes. I can add all build stuff to all repos, independent of NCI configs. Optionally turn them on over time. AH: Just using different versions of OpenMPI? MW: Yes, have my FMS changes as well. AH: FMS changes in master branch? MW: Could do multiple ways. FMS not been updated to GFDL version. Could have subtree/submodule or just dump in. Will change everyone’s code, so might not be solution. I want people to start testing soon. AH: The init hangs: critical core count when these occur? MW: Yes. Tenth gets them, not at 1 degree. AH: Don’t have these at a quarter either? MW: Don’t run 1/4, but frequency of errors increases with cores. AH: If we can test this by running the model for a single time step lots of times to quantify issue, see how bad it is, and see if we get improvement. MW: Have not seen commsplit hang with newer versions of OpenMPI. AH: Do you get hangs AK? AK: Get hangs at initialisation about 10% of time. Since going to YATM not had these issues. Also much more consistent with timing. Was 1.5-2.5 hours/month. Now 4h5m-4h10m for 3 month submit. MW: When we studied variability always IO issues. AK: Don’t know what is behind it. AH: Has coincided with transition to YATM? AK: Yes.
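
The suggestion above, running a single time step many times to quantify the hang rate, could be summarised along these lines. This is a minimal sketch, assuming each short run is simply recorded as hung or not hung. The function names are hypothetical and are not part of payu or any model tooling. The Wilson score interval is used here as one reasonable choice for a small number of trials.

```python
import math


def wilson_interval(failures, trials, z=1.96):
    """Return a (low, high) Wilson score interval for the failure proportion.

    z=1.96 corresponds to an approximate 95% interval.
    """
    if trials <= 0:
        raise ValueError("trials must be positive")
    p = failures / trials
    denom = 1 + z**2 / trials
    centre = (p + z**2 / (2 * trials)) / denom
    half = z * math.sqrt(p * (1 - p) / trials + z**2 / (4 * trials**2)) / denom
    return max(0.0, centre - half), min(1.0, centre + half)


def hang_rate(outcomes):
    """Summarise repeated short runs.

    outcomes: iterable of booleans, True if that run hung (hypothetical record).
    Returns (observed rate, (low, high) interval).
    """
    outcomes = list(outcomes)
    failures = sum(outcomes)
    return failures / len(outcomes), wilson_interval(failures, len(outcomes))
```

Comparing the intervals from two MPI library builds gives a rough indication of whether an apparent improvement is larger than the noise from a limited number of runs.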

AH: Just got to a point where the model is running at all. AK: By late today will have 1 year of IAF. Need capacity to shift to new MPI versions. MW: Concerned about next machine. OpenMPI 1.0 will not be available. Not concerned short term. Concerned long term and stability issues. AK: Yet to fail.

MW: Just updating. Want a plan. Will submit build changes to all the projects. Will also replace FMS with submodule but no change in code, but can be changed when required. AH: With submodule can easily test changes. Need a plan for implementation, testing, check timings.

NH: YATM makes sure it does all its work before ICE asks for anything. Does reads and regridding and waits until required. Would hide any jitter in this disk access. AK: Preemptive fetching of data. MW: Good case for IO servers.
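
The preemptive fetching idea described above can be illustrated with a generic read-ahead pattern. This is a sketch only, not how YATM is implemented: `load` stands in for any hypothetical read-and-regrid step, and a single background thread loads the next item while the consumer works on the current one.

```python
from concurrent.futures import ThreadPoolExecutor


def prefetching_reader(load, keys):
    """Yield load(key) for each key, loading the next item in the background.

    load: hypothetical callable doing the slow work (e.g. read and regrid).
    keys: iterable of whatever identifies each item (e.g. forcing time steps).
    """
    keys = iter(keys)
    with ThreadPoolExecutor(max_workers=1) as pool:
        try:
            pending = pool.submit(load, next(keys))
        except StopIteration:
            return  # nothing to load
        for key in keys:
            ready = pending.result()          # wait for the item loaded ahead of time
            pending = pool.submit(load, key)  # start loading the following item
            yield ready                       # consumer works while the load proceeds
        yield pending.result()
```

Results come back in the same order as `keys`, so any variation in read time is hidden as long as the consumer takes longer than one load.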

AH: We all good now? This fixed? AK: Yes. Just had to tell it to ignore the date in ice restart for the first one. AH: We have a method for this strange restart? Will we need to do this again? NH: Will happen any time you want to use someone else’s restart and don’t want to use their calendar. AH: Are there code changes we need to support this? AK: No. One off thing. Just need to change a value in CICE restart and then change back again. AH: Kial got burnt with this once. MW: We could do this at payu level. NH: Little more to it. Also necessary to change the MOM and YATM date. AK: 3 or 4 things I needed to do to make it go. AH: Think if we need to streamline this? NH: To begin with just document on wiki. AK: I can put it up there.

AH: Fixed?

RF: Should not be able to do an ACCESS-OM run but not do langmuir mixing unless using u10 calculated from empirical formula. Won’t break current ACCESS-OM runs. Have to look into CICE5 and work out how to get winds into ACCESS-OM. ACCESS-CM was fine. For OM I thought there was an option to pass winds, but I misread a preprocessor flag.

RF: A couple of other issues. The order it passes the fields between ice and ocean, the 10m winds are 22nd, another one at 21 which isn’t used. They can’t be done as a common thing. Strange code. The changes I have done will make it safe for the time being. Have to explicitly compile it in if you want to use the winds. AH: Under what circumstances can you use langmuir in ACCESS-OM? RF: Can use MOM6 style to calculate 10m winds. Need to turn on another namelist option. Currently don’t pass winds in OM models. AH: If want to use langmuir do you need to also set the compile flag? RF: At moment can’t pass 10m winds from CICE to MOM. Defaults will be fine. ACCESS wind preprocessor flag is for ACCESS-CM. ACCESS wind flag is a placeholder at the moment. All allocations made no matter what, which is a problem. Currently initialised to zeroes. AH: A placeholder for the future when we get winds through CICE? RF: Yes. Would like to figure out how they pass 10m winds in fully coupled model, and whether they mask them with ice or not. Currently not clear. Would like to make them compatible. AH: I will put in those changes, add new changes and submit a PR. MW: Turn off langmuir by default? It’s broken? RF: No, can calculate winds in MOM6 style. Not using this at the moment.

AH: Above bug brought home issue of testing on MOM5 repo. Currently have 3 targets, MOM-SIS, ACCESS-OM, ACCESS-CM. This got through because I only tested MOM-SIS. NH: There is a Jenkins setup which runs every MOM6 test case. Can’t remember if it has ACCESS-OM, ACCESS-CM builds/test cases. Spent a lot of time to set up testing but it takes maintenance work. Doesn’t run periodically. Love the idea of testing MOM5, please look at what I have already done. Like the idea of production ready, but it takes effort to maintain the system, might not be justified by the number of tests we have to run. If we had weekly PRs would make sense. If infrequent need to revisit the testing every time. AH: Idea was to do some simple builds. MW: Build tests on Travis? AH: Yes. MW: Don’t have to run, just build. Nic did a lot of work to do runs.

NH: Periodically: ACCESS-OM2 build test, and a fast run test (1 day experiment). There is a lot of stuff being done for MOM5, no build test. MW: Is this the GFDL tests? AH: Nic runs the GFDL tests. NH: MOM5 runs not run as frequently. Not maintained, going red. Sure if something simple, maybe not worth doing on Jenkins. But definitely take a look? MW: Travis for commits? Weekly Jenkins runs for commits. AH: can see five MOM5 builds. NH: folder mom-ocean.org on Jenkins. MOM6 guys get a lot of value from it. AH: will take a look. NH: If you can’t change anything let me know.

MC: Didn’t work with https. AH: Made an ESM1.5 repo on OceansAus for Matt to upload MOM5+Wombat code. Pretty much frozen. Peter wanted somewhere to put this. Should it be possible to https? RF: Always had to use ssh. NH: Just need to put in password. MW: https should work, help to know error. AH: give it another crack. Complain on slack. Get on slack.

AH: ESM1.5 repo on OceansAus won’t change much (now frozen), but we have goal of getting WOMBAT code into main MOM5 repo. MC: Might do that in parallel. Who knows what will happen to ESM1.5. Depends on where ACCESS-CM investment goes. ACCESS-CM2 is quite expensive. In the process of putting WOMBAT into ACCESS-OM-1.0. Going through steps. Put WOMBAT into it and submit PR. AH: ESM1.5 is just MOM + WOMBAT? AH: We’re doing the harmonisation, so MOM5 master will have all the important changes. Once we have WOMBAT we have an ESM1.5 equivalent. ESM1.5 will be workhorse coupled model for CoE because ACCESS-CM2 is too expensive. Whilst ESM1.5 on OceansAus will be the canonical version, the MOM5 repo will be effectively the same but can include updates to diagnostics etc.

MC: Checked out ACCESS-OM2-1.0, compiled, but falling over on running payu. Config file has changed a lot since I last used it. Want to run a 1 degree RYF model as basis. MW: Is Matt using the version that isn’t working? Is that what Matt is dealing with? AH: Matt, get on slack and let us know your issues, and we’ll get you going. AK: Looking for a working setup? MC: Yes, 1 deg JRA RYF. AK: Can point you to working config. MC: A month since I cloned. AK: Yeah, need to update.

AK: Asking for just config? MC: Yes, but any information useful. AH: Kial has a lot of configs. I cloned one and changed exe paths and was up and running very quickly. MC: I cloned and built, but when I checked out the config it was pointing to common shared exes. AK: Should change that. NH: Maybe documentation is out of date. Should follow the simple “if you’re a raijin user” instructions. MC: Yes mostly worked. AH: Get on slack! MC: Browser is out of date. NH: If you do it again, follow the quickstart for raijin users instructions. If that doesn’t work we need to fix stuff. MW: A lot of user problems we don’t know about. We have to think about students who will be coming to run this. If Matt can’t figure it out there is no hope. AK: There is a lot that needs to be updated for the more complex instructions. Also the configurations in control are not what they’re currently using. Could fix that easily.

AH: Andrew has issues with a ‘latest’ directory that has symlinks that point to most recent version. AK: Common use case is perturbation experiments. Go back to previous restart and branch a new experiment, but need to know what forcing was used. Rather than latest, have a directory which is named for the date it was setup, or date forcing was updated. If and when things are changed, make another one. All softlinks. AH: One good thing about latest is you have a config that always works with most recent version. If you have a config with latest, they start a new model and they can be confident that it works. AK: No problem extending forcing, only an issue if old forcing files change. AH: they have versioning issues with CMIP5, have a database. NH: latest is not reproducible. Experiment I ran, but latest is changed. Problem with old system, every version jumbled in one directory. At times there were different variables which had different versions. Not all variables had the same version. AH: That is correct. NH: If there is a single directory that has all the variables for that version that is fine. AH: some cases the variables don’t have the same version. I agree this is an issue, but best solved with manifests in payu. MW: filename is not a good system. Filenames change and hashes don’t. AH: If someone has a naming scheme they want, then happy to implement it, but will keep latest, and solve using manifests. NH: was there a reason to put all versions in same directory? AH: the way the JRA55 people publish it.
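
The point that filenames change and hashes don’t can be illustrated with a small content-hash manifest. This is a minimal sketch under stated assumptions: it is not payu’s actual manifest format, and the function names and the choice of SHA-256 are illustrative only.

```python
import hashlib
from pathlib import Path


def file_sha256(path, chunk_size=1 << 20):
    """Hash a file's contents in chunks so large forcing files need not fit in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def build_manifest(root):
    """Map each file under root (relative path) to the hash of its contents."""
    root = Path(root)
    return {
        p.relative_to(root).as_posix(): file_sha256(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def changed_files(old, new):
    """Names present in both manifests whose contents differ."""
    return sorted(name for name in old if name in new and old[name] != new[name])
```

Storing such a manifest alongside an experiment records exactly which forcing data was read, even when it was reached through a ‘latest’ symlink that later moves.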

AK: Do we care if JRA forcing is extended? Does it affect reproducibility? NH: Not an issue. YATM has no end date for an experiment. You set a forcing start/end date, so no problem.

RF: Pavel Sakov is running a KDS75 MOM only on OFAM -75/+75 tenth model. Running 600s timestep from the start, hoping to get up to 900s. The problems in global model are not between +-75. NH: Just poles messing us up. RF: From a flat surface, huge heave. NH: All those little grid boxes. AK: Yes the tripole is the issue. AH: Redo bathymetry? RF: Did a naive regridding, some issues, potholes etc. Still works. Will be running a 100 member ensemble. AH: What is he trying to find out? RF: Look at some issues with OFAM/BRAN/OceanMaps. Interested to see how much is due to vertical resolution. Also a test for the future. An intermediate model between what we run at the moment, and what Andrew is running. MC: Interested in a figure from Kial at the COSIMA meeting, showing how variability changes with surface resolution. AH: How long will he run? RF: A year or two. Thought you might be interested.

New:

Existing:

Date: 10th July 2018

Attendees:

New:

Existing: