Minutes

Date: 11th September 2018

Attendees:

- Marshall Ward (MW) (Chair), NCI

- Aidan Heerdegen (AH) and Andrew Kiss (AK), CLEX ANU

- Russ Fiedler (RF), Matt Chamberlain (MC), CSIRO Hobart

- Nic Hannah (NH), Double Precision

- Peter Dobrohotoff (PD), CSIRO Aspendale

Clean up Actions list

Finished:

- Incorporate RF wave mixing update into MOM5 codebase + bug fix (AH)

- Code harmonisation updates to ACCESS and ESM meetings (PD, RF)

- Check red sea fix timing is absolute, not relative (AH)

- MW liaise with AK about tenth model hangs (AK, MW)

- Profile ACCESS-OM2-01 (MW)

Deleted:

- Follow up with Andy Hogg regarding shared codebase (MW)

- Nudging code test case (RF)

CICE in ACCESS-OM2

MW: 4 block success. 16 block didn’t work. sectrobin also didn’t work. Limited perspective on problem.

RF: Blow out in time with extra blocks was halo updates. Weakness with round robin. A lot of overhead, no local comms. Maybe 8 tiles/processor might work. Marshall’s profiling showed a small number of processors dominated run time. Want to minimise the maximum. That is the limiter.

AH: Where are the max tiles?

RF: Seasonal ice near Hudson Bay, Sea of Okhotsk and Aleutian Islands.

MW: Nic used total CPU count less than number of blocks

RF: Could run with more, or less. MW: 80 CPUs less, could solve this.

AH: General strategy to concentrate on not assigning CPUs to the low work (blue areas) and let the high work areas take care of themselves?

RF: Only worried about slowest tile. Nice to have even distribution, but hard to achieve that in practice.

AH: Slowest tiles change over time. RF: Read in a map of expected ice concentration. Or have a heuristic, say weight by latitude. AH: If we identify areas that do very little work, say we never want to have many processors there, and free up processors for high work areas.

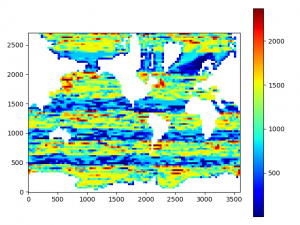

AK: There are five hot stripes and four cold stripes. Some processors have 5 blocks, some have 4. The outlying busiest ranks are on those hot stripes. If we get rid of striping with more even split, that would have maybe a spike on a lower baseline

RF: About half the processors have 5, about half have 4. Request a few more PEs and that would come close to balancing this issue.

NH: First attempt 1600 PEs with an even 4 blocks across all. With idealised test case Ocean was not blocking at all. Thought could save a couple of hundred PEs, and there was not a big difference. However Andrew’s real world config is behaving differently. Worth going back up to 1600 and doing an even 4 or even 8 blocks. Assumed wanted everything to be even. Seemed roughly the same to have a mix. This profiling shows I was wrong.

RF: Can easily work out to get exactly 5 blocks per PE. AK: If you give me that number I can try it. NH: 5 across the board is better. Don’t want a single PE doing more work. RF: Slowest one kills you.
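
A minimal sketch of the even-split arithmetic discussed above. It assumes a plain cyclic (round robin) assignment of blocks to PEs and a hypothetical total of 6230 active blocks, chosen only so that 1385 PEs gives a roughly half-and-half mix of 4 and 5 blocks; the real block count and CICE's actual distribution code are not given in these minutes.

```python
from collections import Counter


def blocks_per_pe(n_blocks: int, n_pes: int) -> Counter:
    """Cyclic assignment: block i goes to PE i % n_pes.

    Returns a histogram {blocks held by a PE: number of such PEs}.
    """
    loads = [0] * n_pes
    for block in range(n_blocks):
        loads[block % n_pes] += 1
    return Counter(loads)


def pes_for_even_split(n_blocks: int, blocks_each: int) -> int:
    """Smallest PE count such that no PE holds more than blocks_each blocks."""
    return -(-n_blocks // blocks_each)  # ceiling division


N_BLOCKS = 6230  # hypothetical, not taken from the actual run

uneven = blocks_per_pe(N_BLOCKS, 1385)
n_even = pes_for_even_split(N_BLOCKS, 5)
even = blocks_per_pe(N_BLOCKS, n_even)

print("1385 PEs:", dict(uneven))
print(f"{n_even} PEs:", dict(even))
```

With these assumed numbers the mixed 4/5 layout collapses to a uniform 5 blocks per PE, which is the case where no single PE carries an extra block.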

AH: How does the land masking affect it? A thicker stripe in NH? RF: Yes. Did I post a picture of where tiles are allocated? NH: More blocks means getting rid of more land? RF: Lose with communication cost.

NH: In order to get this working I ran into the raijin problem: messages getting lost and deadlocks. When we got 0.1 deg MOM-SIS working had issues with point to point sends and recvs, and Marshall changed that to a proper gather to get initialisation working. The gather inside CICE is implemented with point to point sends and recvs. Assume similar. It is doing a send for every block. MW: Andrew’s finished ok? AK: Ran with 30×35. MW: mxm might resolve this problem? NH: Resolved by putting in a barrier after all the sends, otherwise deadlocks. MW: Did you add barriers? NH: Yes to the MPI gather code. MW: Clear that CICE is heavily barriered. NH: Could implement properly with MPI_gather. MW: Caveat didn’t work with the global field. NH: Only does a global gather once when writing out restarts. Not too bad. MW: A lot of MPI ranks? NH: 1600 x number of blocks is the number of sends. MW: So number of messages, not number of ranks. MW: Only added barrier for restart? NH: Could have done that, but added in MPI_gather. Maybe that is bad? Actually didn’t add, just enabled it by defining a preprocessor flag.
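
The message-count point above can be put as a small worked calculation. This is only an illustration: it assumes 4 blocks per rank (as in NH's 1600 PE configuration) and assumes that a collective gather would have each rank contribute a single buffer holding all of its blocks.

```python
def gather_message_counts(n_ranks: int, blocks_per_rank: int) -> dict:
    """Count messages for one global gather under two schemes.

    point_to_point: one send per block, as described in the discussion.
    collective_contributions: one contribution per rank (assumed layout).
    """
    return {
        "point_to_point": n_ranks * blocks_per_rank,
        "collective_contributions": n_ranks,
    }


counts = gather_message_counts(n_ranks=1600, blocks_per_rank=4)
print(counts)  # {'point_to_point': 6400, 'collective_contributions': 1600}
```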

AH: Is there an effect that it gets wider in the north that you’re sampling more ice in those areas?

AH: Should we pull out the slowest blocks and see where all the blocks are that contribute to the slowest processors.

RF: Correspond to areas of highest ice concentration. AH: There is ice in Okhotsk in northern summer? RF: Yes.

MW: Arctic and Antarctic are sharing work. RF: How many for this run? MW: 1385. RF: If you run with 1500 or so get an even distribution.

NH: Should decide what is the next step/run?

MW: Two options: massively increase number of blocks, but this is blowing out with comms time, or an evenly divided 5 blocks. RF: Yes that is the one to do next.

AK: sectrobin should solve the communications issue but couldn’t get it to run. NH: Not sure if code needs to change? RF: Test on 1 degree model.

AK: First step to even up current run with 4 or 5 blocks. MW: Should confirm that many blocks is a comms problem and not a tripole issue for example. But this is a research problem.

AK: Will switch to this for 0.1 deg production as it is already better.

NH: New code 1 block per PE gives identical answers to old code. 4 blocks does not give identical answers to old code. Not sure if I should expect it to be the same. Don’t know how CICE works. In terms of coupling it should be the same if you’re coupling to individual blocks or multiple blocks. Not ruling out it should be identical and there is something going wrong. AK: What would make it non-identical? Order of summation? NH: Could be something like that. MW: Might be CICE doing a layer calc before doing vertical? Have to know more about CICE. NH: Might be worth looking into further so at least we know that we’re not making bugs.

AK: How would I switch to this for the production run? Not bitwise identical? Just check fields look physically reasonable? NH: Hard problem. Can’t see physical difference. Only looking at last few bits of a floating point number. MW: Did an MPI sum on a single rank and it changed the last bit. Found it running the FMS diagnostics and that is why they failed. Don’t fail at GFDL. Scary stuff. NH: Scary and time consuming.
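
As a generic illustration of the "order of summation" point (not CICE or FMS code), floating point addition is not associative, so regrouping the same terms can change the last bits of a result while leaving it physically indistinguishable:

```python
import math

values = [0.1, 0.2, 0.3]

# Same three numbers, two different groupings of the additions.
grouped_left = (values[0] + values[1]) + values[2]
grouped_right = values[0] + (values[1] + values[2])

print(grouped_left.hex(), grouped_right.hex())
print("bitwise identical:", grouped_left == grouped_right)
print("close:", math.isclose(grouped_left, grouped_right, rel_tol=1e-12))
```

The two sums fail a bitwise comparison but agree to well within any physical tolerance, which is why a reproducibility check based on exact equality can fail even when the fields look the same.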

MW: Clear strategy. Get rid of bands. Go with 1600 cores. Have a 16 block job running, will keep everyone updated.

Code Harmonisation

AH: My understanding with the ESM harmonisation is that we’re close, as we haven’t yet put in the coupling changes from CM2 that you had to take out of the ESM code. PD: Dave Bi’s iceberg scheme? AH: If we get the WOMBAT code into MOM5 that would be harmonised I think. PD: Maybe Matt has a better handle?

MC: Are the OM and CM almost harmonised except for iceberg information? Are they almost the same? AH: I believe so. Once we get WOMBAT in there we’re good to go. Russ had a different idea about how to handle the case of different coupling fields.

RF: Have to get rid of ACCESS keyword. In many cases redundant. AH: ACCESS keyword can be replaced by ACCESS_CM or ACCESS_OM. RF: Yes!

RF: On CICE side of things (and probably MOM) coupling fields are currently defined as parameters. Can use calls to PRISM, test return code, put some tests for legal code/parameters for icebergs for example. Don’t need ifdef’s, can test on the fly. A lot easier than recompiling every time.
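
A rough sketch of the run-time approach RF describes, written in Python rather than the models' Fortran. Everything here is hypothetical: `define_field` stands in for a coupler call that returns a status code (it is not the PRISM/OASIS API), and the field names and return codes are invented for illustration.

```python
OK, NOT_CONFIGURED = 0, 1  # hypothetical return codes


def define_field(name: str, configured_fields: set) -> int:
    """Stand-in for a coupler 'define field' call that returns a status code."""
    return OK if name in configured_fields else NOT_CONFIGURED


def active_fields(required: list, optional: list, configured_fields: set) -> list:
    """Decide at run time which coupling fields to exchange."""
    active = []
    for name in required:
        if define_field(name, configured_fields) != OK:
            raise RuntimeError(f"required coupling field missing: {name}")
        active.append(name)
    for name in optional:
        # Optional fields (e.g. icebergs) are used only if the coupler accepts them.
        if define_field(name, configured_fields) == OK:
            active.append(name)
    return active


om_fields = active_fields(["sst"], ["iceberg_melt"], {"sst"})
cm_fields = active_fields(["sst"], ["iceberg_melt"], {"sst", "iceberg_melt"})
print(om_fields, cm_fields)
```

The same executable then handles both configurations, with the choice driven by what the coupler reports rather than by compile-time ifdefs.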

AH: How do we implement this? Put WOMBAT code in now so we have an ESM harmonised version and then deal with coupling etc as this is ACCESS-CM? RF: Want to bed down ACCESS-CM and OM harmonised first. The WOMBAT stuff will move in quite simply. I’d like to take that on, have been tasked to do this to take some of the load off Matt. Get this first step out of the way and then move on to WOMBAT and ESM. Until the first step done things can be in a state of flux.

MC: Is wind enhanced mixing in ACCESS-OM? RF: Yes. MC: FAMIP in ACCESS-OM? RF: They’re in MOM5. MC: They weren’t in ACCESS-CM code. AH: That is a 3 year old fork. MC: Can we update ESM from ACCESS-OM? AH: This morning putting WOMBAT changes into MOM5 pull request. Can grab and check if it works. MC: What is the difference in pulling from one direction to the other? AH: ESM is a 3 year old fork with little history in common with current MOM. Couldn’t put the code into ESM, it would be too difficult. Cherry picked your changes into the MOM5 code, but wouldn’t work the other way. Will liaise with Russ to get ACCESS-CM changes.

AH: Would WOMBAT always be part of MOM5-SIS? MW: Is it big? RF: No, very small. MW: Let’s leave it in MOM5. Just executable bloat. RF: Just a few fields. MC: Allocated, so if not turned on, then no issues. RF: WOMBAT wants the 10m waves, but we need that for the wave mixing as well.

Travis CI on MOM5

AH: ACCESS-OM no longer compiles because you need libaccessom2 as well. NH: Same before. Always needed OASIS. AH: I’ve got CM compiling by pulling in OASIS and making it. All the compilation tests are passing. Could pull in the libaccessom2 and compile in a similar way to ACCESS-CM. There is no old ACCESS-OM build anymore. It is ACCESS-OM2. MW: Do we want to do this external to the repo? AH: Nice to have the tests there and passing. OM now has different driver code to CM, so can’t be sure you’ve done it properly without an ACCESS-OM compilation test. NH: There always needs to be a dependency on a coupler. libaccessom2 is more than a coupler. Maybe some of it is undesirable. Not worse than having a dependency on OASIS. AH: Just wanted to make sure there wasn’t an ACCESS-OM that was independent of libaccessom2. MW: Can you provide libaccessom2 as a binary and headers? AH: Yes, that is a possibility. NH: Could just be a .a file. MW: That is how you handle dependencies, as a binary, like libc. MW: Do you call OASIS in MOM? NH: Yes. In yatm don’t directly call OASIS. Could change coupler in future without changing models. MW: No problem with wrapping OASIS. AH: Can do the same thing I did with CM, pulled in OASIS, built it. Pretty straightforward.

Actions

New:

- Create even 5 blocks per PE map for CICE (RF)

- Get coupling changes into MOM for harmonisation (RF+AH)

Existing:

- Update model name list and other configurations on OceansAus repo (AK)

- Shared google doc on reproducibility strategy (AH)

- Pull request for WOMBAT changes into MOM5 repo (MC, MW)

- Compare out OASIS/CICE coupling code in ACCESS-CM2 and ACCESS-OM2 (RF)

- After FMS moved to submodule, incorporate MPI-IO changes into FMS (MW)

- Incorporate WOMBAT into CM2.5 decadal prediction codebase and publish to Github (RF)

- Move FMS to submodule of MOM5 github repo (MW)

- Make a proper plan for model release — discuss at COSIMA meeting. Ask students/researchers what they need to get started with a model (MW and TWG)

- Blog post around issues with high core count jobs and mxm mtl (NH)

- Look into OpenDAP/THREDDS for use with MOM on raijin (AH, NH)

- Add RF ocean bathymetry code to OceansAus repo (RF)

- Add MPI barrier before ice halo updates timer to check if slow timing issues are just ice load imbalances that appear as longer times due to synchronisation (NH).

- Redo SSS restoring with patch smoothing (AH)

- Get Ben/Andy to endorse provision of MAS to CoE (no-one assigned)

- CICE and MATM need to output namelists for metadata crawling (AK)

- Provide 1 deg RYF ACCESS-OM-1.0 config to MC (AK)

- Update ACCESS-OM2 model configs (AK)